I believe the central problem of the 21st century is how civilization co-evolves with science and technology. As our understanding of the world deepens, it enables technologies that confer ever more power to both improve and damage the world. There's currently much public discussion of this in the context of artificial superintelligence (ASI). However, it's a fundamental recurring challenge, affecting areas including climate change, genetic engineering, nanotechnology, geoengineering, brain-computer interfaces, and many more. How can we best benefit from science and technology, while supplying safety sufficient to ensure a healthy, flourishing civilization?

This essay emerged from a personal crisis. From 2011 through 2015 much of my work focused on artificial intelligence. But in the second half of the 2010s I began doubting the wisdom of such work, despite the enormous creative opportunity. For alongside AI's promise is a vast and, to me, all-too-plausible downside: that the genie of science and technology, having granted humanity many mostly wonderful wishes, may grant us the traditional deadly final wish. And as I grappled with that, I began seriously considering whether science and technology creates other existential risks (xrisks). Do we have the ideas and institutions to avert such risks? If not, should I be working on science and technology at all? And what should we collectively be doing about such risk?

This internal conflict has been unpleasant, sometimes gut-wrenching. As a scientist and technologist, my work is not just a career, it is a core part of my identity, intertwined with fundamental personality traits: curiosity, imagination, and a drive to understand and create. I have devoted my life to science. If pursuing science and technology is a grave mistake, then how should I change my life? I began considering changing careers or becoming an anti-AI activist. I also considered more symbolic actions, like taking offline my book about neural networks. But such actions seemed short-sighted and reflexive, not informed by a deep investigation of the underlying issues.

While these questions are personal, to answer them well one needs a big-picture view of how humanity should meet the challenges posed by science and technology, and especially by ASI. This essay attempts to develop such a big-picture view. It's an informal technical essay, long and discursive, a report on partial progress; an ideal public-facing version would be much shorter and more focused. But I needed to metabolize past thinking on the subject, and that's meant a lot of exploration. The value in retracing old paths is that it makes clear crucial questions that, when reconsidered, lead to novel ideas. The essay extends and complements, but may be read independently of, my earlier work on xrisk, particularly "Notes on Existential Risk from Artificial Superintelligence", "Notes on the Vulnerable World Hypothesis", and "Notes on Differential Technological Development".

For progress there is no cure… We can specify only the human qualities required: patience, flexibility, intelligence. – John von Neumann, in "Can We Survive Technology?"

Knowledge itself is power. – Francis Bacon, in "Meditationes Sacrae"

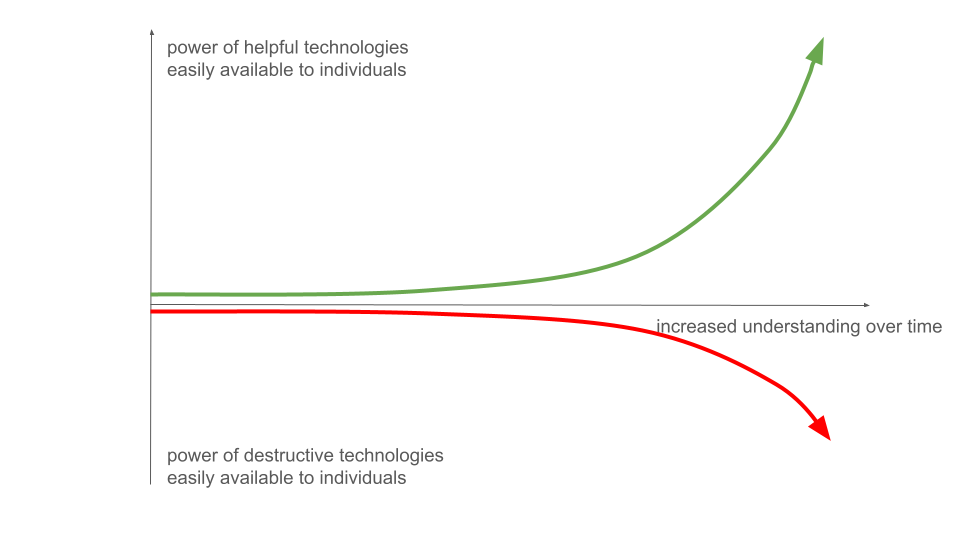

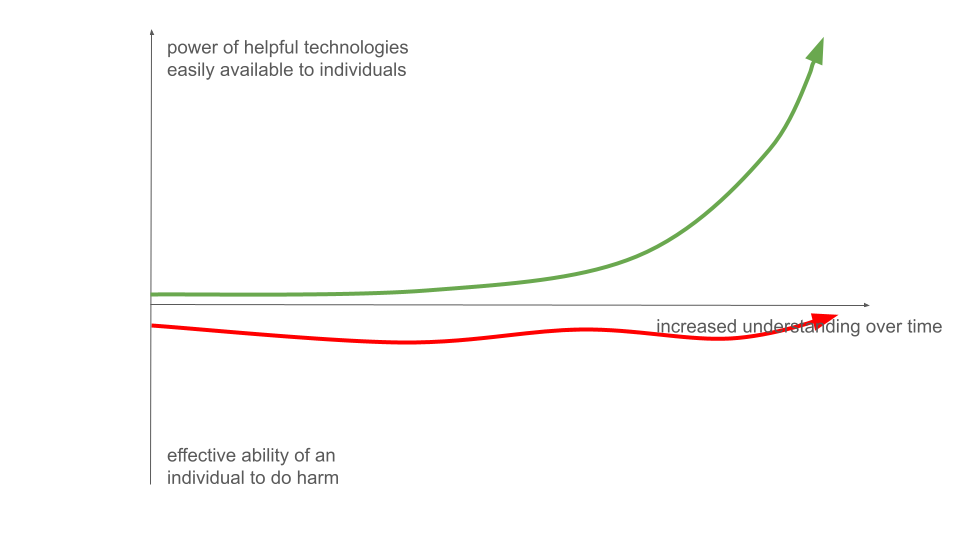

Let's begin with a simple heuristic model illustrating how science and technology impact the world. The underlying idea was stated in the preface: as humanity understands the world more deeply, that understanding leads to more powerful technologies, which give ordinary individuals1 and small groups more capability to impact the world, for good and ill2:

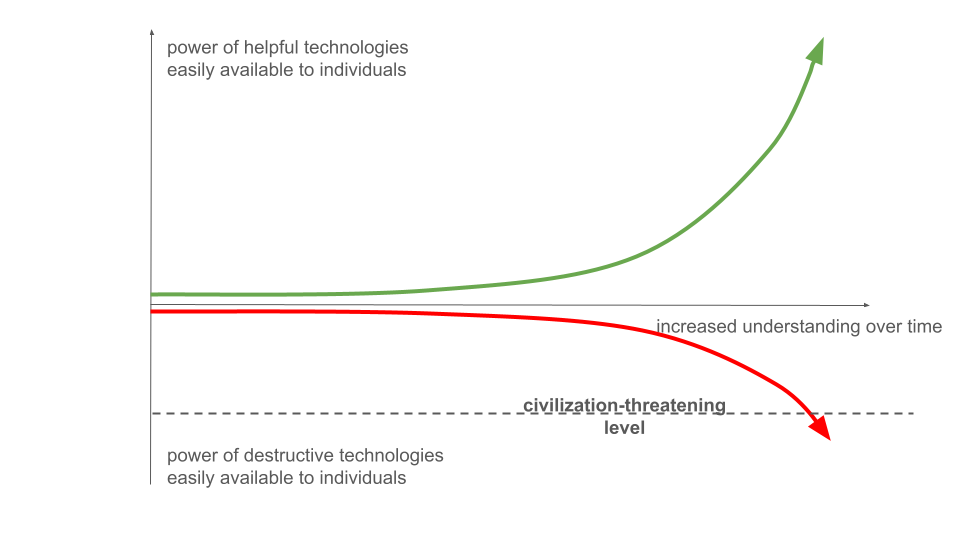

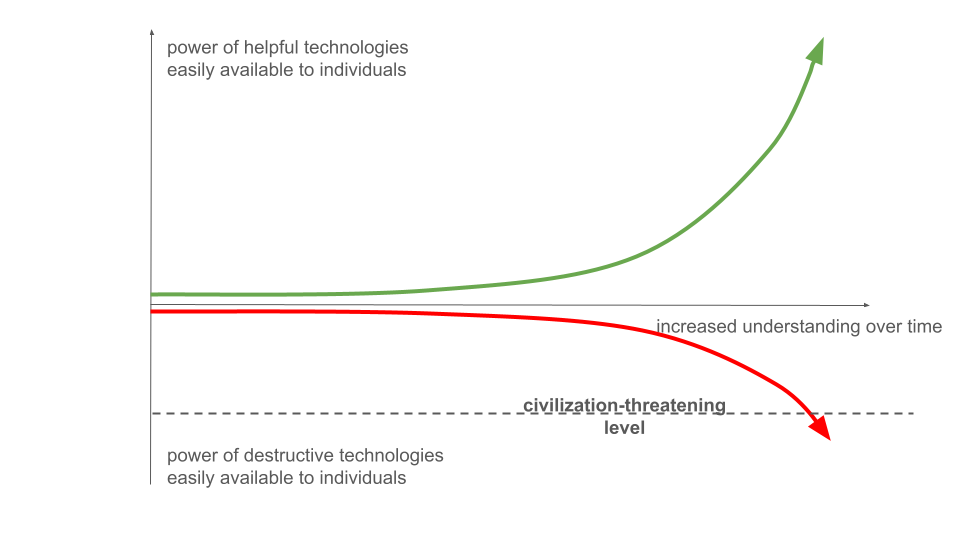

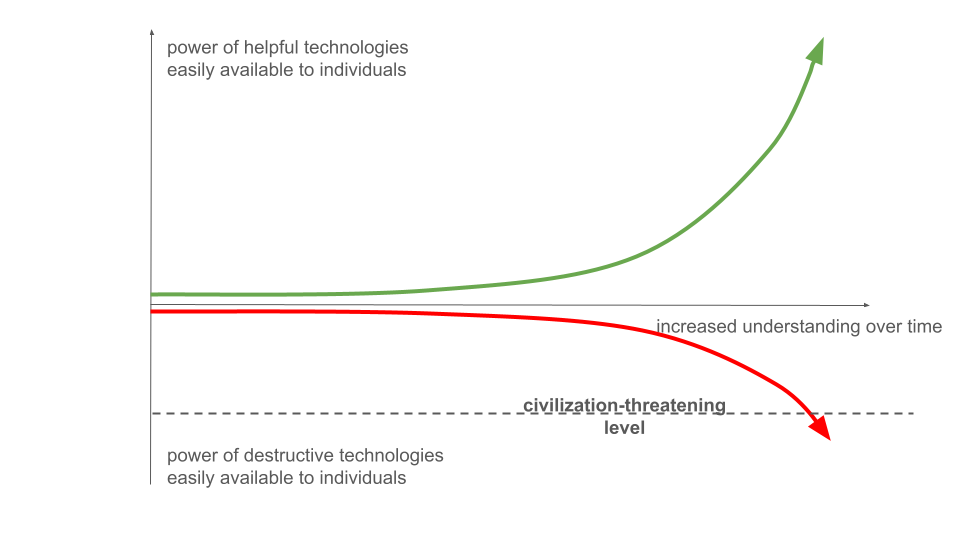

The positive curve gives us wonderful, life-enabling abilities. The negative curve destroys. Fortunately, today this destructive potential is limited. When someone like Anders Behring Breivik kills 77 people we grieve and we may alter laws and policing, but such an event is not a threat to humanity. However, it raises the question: as technology continues to advance, how much more damage could a single deranged individual or small group inflict? In the 1980s and 1990s the Aum Shinrikyo doomsday cult sought to develop or acquire weapons to kill nearly all humans; developing such weapons today is likely far easier than during Aum Shinrikyo's time. Although Aum Shinrikyo is gone, doomsday cults remain a threat3. Is humanity gradually developing tools that will democratize Armageddon? Does the heuristic graph above escalate to a civilization-ending level of dangerous technology?

This possibility has been considered many times throughout the history of science and technology, but it has become particularly contentious recently, with the rise of interest in AI, AGI, and ASI. Many thoughtful people are now warning of what they see as potential existential risk (xrisk)4 from ASI. Meanwhile, skeptics complain that the supposed risks sound like low-quality science fiction – entertaining stories, perhaps, but not well argued or likely to occur in reality. Understandably, they want to hear detailed, plausible scenarios before they take such xrisks seriously. On the other side: (a) there is a prudent caution about developing such scenarios in detail, lest it help bring about those very outcomes; and (b) we should, by definition, expect to fail to anticipate how an ASI would be capable of acting, since it will perceive possibilities we do not. It's a situation that seems difficult to resolve, except through enactment of an actual catastrophe.

I won't make a detailed argument for xrisk from ASI in this essay. Arguments both for and against have been discussed extensively elsewhere, and I refer you there5. I also haven't yet explicitly defined AI, AGI, or ASI. I discuss how I'm using the terms in the Appendix, but briefly: by "AGI", I mean a system capable of rapidly learning to perform comparably to, or better than, an intelligent human being, at nearly all intellectual tasks human beings do, broadly construed. By "ASI" I mean a system capable of rapidly learning to perform far better than an intelligent human being at nearly all intellectual tasks human beings do, broadly construed, subject to the constraints that "better" be reasonably well-defined, and humans are not already near the ceiling. (So noughts and crosses is out as a test, and somewhat subjective tasks like writing poetry are in a grey zone.) I intend no implication that today's LLMs are either close to or far from AGI. While I have opinions on that subject, they're weakly held and not relevant to this essay. I say this because sometimes when people talk about AGI or ASI, others change the premise, responding with "But LLMs can't […]" This is like making a claim about rockets, and having it "refuted" by someone discussing the properties of bicycles. Of course, if you believe we are many decades away from achieving AGI, then many concerns in this essay won't be of immediate interest. Personally, my weakly-held guess is that ASI is, absent strong action or disaster, most likely one to three decades away, with a considerable chance it is either sooner or later. "AI" I shall not use as a technical term at all, but rather as a catchall denoting the broad sphere of activities associated to developing AGI- or ASI-like systems.

While the plausibility of xrisk scenarios is disputed, there are some striking broad pathways toward catastrophic risk. Briefly6, let me list a few: easily constructed nuclear weapons, perhaps inspired by one of the Taylor-Zimmerman-Phillips designs7; easily-constructed antimatter bombs; destructive self-replicating nanobots – while the notion of "grey goo" is sometimes ridiculed, something like grey goo has happened at least twice on earth (the origin of life, and the great oxygenation event); large-scale computer security compromise, leading to failures or takeover of crucial systems (electric grid, banking, the supply chain, the nuclear strike capability, and so on). And then there's the risk many see as most imminent: biorisk, small groups deliberately or accidentally creating or discovering pathogens far more devastating than COVID-19. Unfortunately, this gets easier every year. Consider the accidental(!) lethalization of mousepox achieved in 2004 (and later essentially perfected, even against vaccinated mice)8. This has led to concern a similar mortality rate could be achieved in human smallpox through a similar modification. All these risks illustrate how, as we understand the universe more deeply, that understanding enables us to build more powerful capabilities into our technologies. This is a destabilizing situation, placing ever more power in the hands of ordinary individuals.

All these threat models are of concern, even if you ignore ASI. And I expect many more risks will be discovered in the decades to come. As mentioned in the preface, over the past few years I've increasingly felt uneasy about all my technical projects – science and technology itself seems to be the fundamental threat, not just ASI, and anything contributing to science and technology has come to seem more questionable. In 1978, Carl Sagan9 speculated that technical civilizations may inevitably kill themselves, although he holds out hope that not all is lost:

Of course, not all scientists accept the notion that other advanced civilizations exist. A few who have speculated on this subject lately are asking: if extraterrestrial intelligence is abundant, why have we not already seen its manifestations?… Why have these beings not restructured the entire Galaxy for their convenience?… Why are they not here? The temptation is to deduce that there are at most only a few advanced extraterrestrial civilizations – either because we are one of the first technical civilizations to have emerged, or because it is the fate of all such civilizations to destroy themselves before they are much further along.

It seems to me that such despair is quite premature.

In a similar vein, in 1955 John von Neumann wrote an essay10 whose title encapsulates the core concern: "Can we survive technology?" Although his concrete concerns mostly involve nuclear weapons and climate control – what today we'd call geoengineering – much of the argument applies in general, across technology, and von Neumann intended a general argument. Note von Neumann repeating one of the lessons of Prometheus and of the Sorcerer's Apprentice: the inextricable link between the positive and negative consequences of technology:

What could be done, of course, is no index to what should be done… the very techniques that create the dangers and the instabilities are in themselves useful, or closely related to the useful. In fact, the more useful they could be, the more unstabilizing their effects can also be. It is not a particular perverse destructiveness of one particular invention that creates danger. Technological power, technological efficiency as such, is an ambivalent achievement. Its danger is intrinsic… useful and harmful techniques lie everywhere so close together that it is never possible to separate the lions from the lambs… What safeguard remains? Apparently only day-to-day – or perhaps year-to-year – opportunistic measures, a long sequence of small, correct decisions… the crisis is due to the rapidity of progress, to the probable further acceleration thereof and to the reaching of certain critical relationships… Specifically, the effects that we are now beginning to produce… affect the earth as an entity. Hence, further acceleration can no longer be absorbed as in the past by an extension of the area of operations. Under present conditions it is unreasonable to expect a novel cure-all. For progress there is no cure. Any attempt to find automatically safe channels for the present explosive variety of progress must lead to frustration. The only safety possible is relative, and it lies in an intelligent exercise of day-to-day judgement… these transformations are not a priori predictable and… most contemporary “first guesses” concerning them are wrong… The one solid fact is that the difficulties are due to an evolution that, while useful and constructive, is also dangerous. Can we produce the required adjustments with the necessary speed? The most hopeful answer is that the human species has been subjected to similar tests before and seems to have a congenital ability to come through, after varying amounts of trouble. To ask in advance for a complete recipe would be unreasonable. We can specify only the human qualities required: patience, flexibility, intelligence.

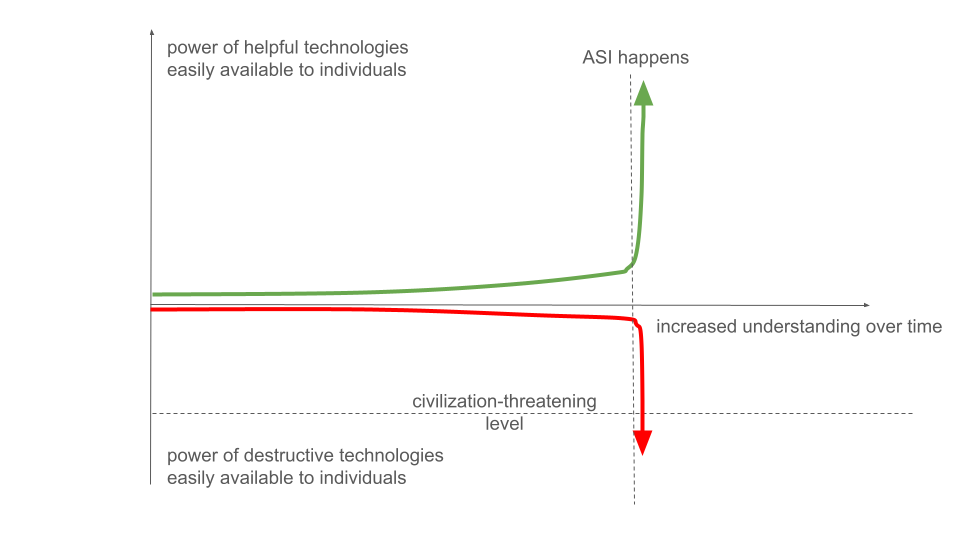

This is a remarkable sequence of thoughts from one of humanity's greatest scientists and technologists. And, as the passage argues, much of the problem is intrinsic to science and technology broadly, not just ASI. However, ASI adds two challenges which make the concern much more urgent. First, it may accelerate the entire process, creating a kink in the heuristic curve illustrated above:

That is, ASI may hasten the day when an ordinary person or small group may misuse science and technology to cause catastrophic harm. Second, ASI may itself become a threat11, leading to a takeover by the machines (or a machine). This rogue ASI-taking-over-the-world model is much-discussed12, and sometimes taken to be the principal concern about ASI. However, a more important question is: how much power does the ASI system confer, either to its user, or to itself? An entirely obedient ("aligned") but extraordinarily powerful system could do a great deal of damage to humanity, if it was doing the bidding of a Vladimir Putin or Aum Shinrikyo. It doesn't matter so much whether the ASI has an operator or operates itself. People sometimes convince themselves that rogue scenarios won't happen, and therefore ASI will be okay. This conclusion is wrong, since the fundamental issue isn't control, it's how much power is conferred by ASI. To repeat von Neumann: "Technological power, technological efficiency as such, is an ambivalent achievement. Its danger is intrinsic." As a simple example of why this matters: some people believe technologies such as BCI and neurotech and intelligence augmentation will help address threats from ASI. Perhaps they may help against a rogue ASI, but they may also create novel risks for misuse, and thus have the opposite of the hoped-for effect.

(Why would ASI greatly speed up science and technology? Some people find this self-evident; others regard it as unlikely. I've discussed this in my "Notes on Existential Risk from Artificial Superintelligence", and will only make some brief remarks here. One objection comes from people who deny the premise of ASI, treating "ASI" as though it means "glorified LLM", not a system capable of superhuman intellectual performance in nearly all domains. This is a common enough objection to be worth mentioning, but it is not worth arguing. A more substantive objection is that scientific and technological progress is bottlenecked by factors other than intelligence. In this view, an army of super-Einsteins may help surprisingly little. It's sometimes pointed out that many people who believe ASI will cause a dramatic acceleration have little background directly contributing to science and technology, and don't always realize the extent to which science is not merely deduced from first principles; it depends upon a great many contingent facts in the world which must be observed, some over long timescales. Such bottlenecks can be reduced – robots could, for example, gather data on a much larger and faster scale than humans13. But all such bottlenecks can't be completely eliminated. My belief, discussed in my notes on existential risk, is that ASI will remove many of today's bottlenecks, greatly speeding up science and technology; however, new bottlenecks will arise (and may be obviated by later systems14).)

So: while my broad concern is the risk posed by science and technology, if ASI were not on the horizon, I'd likely put that concern aside, and leave it for future generations. We humans have, as von Neumann observes, done surprisingly well addressing the problems created by science and technology. But ASI makes this more questionable, especially if it speeds up the rate at which such problems are created. So for the remainder of this essay I focus on the impact of ASI, though I occasionally make remarks in the broader context. I have kept the title "How to be a wise optimist about science and technology?", since that framing is both important in its own right, and provides a powerful way of thinking about ASI in particular.

Let us consider again the horizontal line at which ordinary individuals or small groups can develop technologies posing catastrophic risk to humanity:

This is equivalent to the question of whether we will develop recipes for ruin15. Meaning: simple, easy-to-follow recipes that create catastrophic risk. Or in more detail: will we ever discover a simple, inexpensive, easy-to-follow recipe that an individual or small group with a typical education and typical resources can follow, which will do catastrophic damage to humanity16? Recipes for very large scale, cheap-and-easy antimatter bombs would fit the bill, for instance, or cheap-and-easy deadly pandemics.

Fortunately, today we don't know of any such recipe for ruin. But that doesn't mean none will ever be discovered. Many technologies that now seem familiar were once unimaginable. Consider how surprising human-initiated nuclear chain reactions must have seemed to the people who discovered them. Or, going back much further in history, how surprising fire would have been to people seeing it for the first time17. Neither nuclear bombs nor fires are recipes for ruin, but both have some characteristics of that flavour, and both must initially have been very surprising. Admiral William Leahy, Chief of Staff of the US armed forces at the end of World War II, called the atomic bomb project "the biggest fool thing we have ever done. The bomb will never go off, and I speak as an expert in explosives." This quote is sometimes used to illustrate the dangers of a lack of imagination; in fact, Leahy was both imaginative and technically competent. He had taught physics and chemistry at Annapolis, and knew the navy's weapons well. A better lesson is perhaps that the Hiroshima bomb seems a priori implausible: take two inert bodies of material –- each small enough to safely be carried by a single human being –- and bring them together in just the right way. If you'd never heard of nuclear weapons, it would seem obviously impossible that they'd explode with city-destroying force. You need to understand a tremendous amount about the world –- about relativity, particle physics, and exponential growth in branching processes –- before it is plausible. That novel and very non-obvious understanding revealed a latent possibility for immense destruction, one almost entirely unsuspected a few decades earlier. Similarly, it is entirely plausible that recipes for ruin may one day be discovered, and the only barrier is our current level of understanding18.

My experience is that the people who (like me) worry about xrisk from ASI (and, more broadly, from science and technology) are also those who instinctively believe recipes for ruin are likely to one day be discovered. It suggests it's just a matter of time. People who instinctively don't believe recipes for ruin are ever likely to be discovered are much more likely to be dismissive of xrisk. Here, for instance, is John Carmack refusing to consider the question. Perhaps this is motivated reasoning on Carmack's part, but he's too thoughtful and intellectually honest for me to believe that's likely. I suspect it's because different people acquire different bundles of intuition from their past experience, particularly their past expert training. Those bundles of intuition take thousands of hours to acquire, and vary greatly for different types of expertise – one is acquiring an entire expert subculture. And different bundles of intuition lead to very different conclusions about whether recipes for ruin are ever likely to be discovered. I suspect, for instance, this is why many economists don't find xrisk compelling – compared to a physicist or chemist they have very little feeling for how much potential lies hidden in the physical world, just as physicists and chemists often have poorly-developed intuitions about economics and scarcity.

Of course, it's reasonable to demand a detailed argument to support a claimed threat, not intuition from a subculture! Szilard and Einstein didn't go to FDR with an intuition about nuclear weapons, or tell him he needed to become a physicist; they went with detailed claims about which materials would be fissionable and why; how this would lead to a runaway process and to explosive force; what this had to do with German trade policy; and what American institutions should do. However, as I've discussed in detail elsewhere19, and mentioned above, there are intrinsic reasons it's hard to reason about the presence or absence of ASI xrisk in this way: (1) any pathway to xrisk which we can presently describe in detail doesn't require superhuman intelligence to discover; (2) any sufficiently strong argument for xrisk will likely alter human actions in ways that avert xrisk; and (3) the most direct way to make a strong argument for xrisk is to convincingly describe a detailed concrete pathway to extinction, but most people tend (naturally and wisely) to be hesitant to develop or share such scenarios. These three "persuasion paradoxes", especially the first, present a barrier to reasoning about risks from ASI in the usual ways.

All that said, my opinion is: if discovery continues to unfold under our existing institutions of technocratic capitalism, then recipes for ruin are likely to one day be discovered. I further believe ASI will likely greatly hasten the discovery of such recipes. I won't make a detailed argument – that would require conveying an entire culture, and would dwarf the rest of the essay. I do want to say a little about variations of the idea. A crucial question is: are recipes for ruin inevitable, given sufficient advances in science and technology20? Some people take this to be a question about the laws of nature – you might think it's simply a property of the universe. But it's more complex, since whether something is a recipe for ruin depends upon both: (1) the ambient environment (e.g., the presence or absence of some population level of immunity to a pathogen that would be deadly to some populations, and a non-event for others); and (2) the way in which we develop the technology tree21 (e.g., whether an effective missile shield is developed before or after large-scale multipolar deployment of ICBMs). With that said: there may be dominant recipes for ruin, that is, recipes for ruin which are extremely difficult to defend against, no matter the environment and other defensive technologies. If there's a cheap-and-easy recipe to create a large black hole on the surface of the Earth, then it seems likely the only protection is to suppress those branches of science and technology leading to such a recipe. Now, I'm not lying at night worrying about this particular example: I find such a recipe logically possible, but implausible. But what about some form of grey goo which is easily-created and hard-to-defend against? Unfortunately, that seems plausible and difficult to defend except by pre-empting development. It's happened before in the history of the earth, and we're not so far from being able to do it again. If it's easy to discover dominant recipes for ruin then that would certainly help explain why we don't see evidence of intelligent life writ large in the stars.

You may or may not accept those intuitions. As I said, whether you do will likely depend on your prior expertise. But intuition aside, for the remainder of the essay I will take as a basic premise that ASIs are likely to hasten the discovery of recipes for ruin, including recipes dominant against defenses; they can then be misused either by malevolent human or machine actors, creating xrisk for the human race. If you can't accept this premise, even for the sake of argument, then you won't find much of interest in this essay.

This line of thought made me deeply pessimistic for some time. But then, prompted by Catherine Olsson, I began to focus on the question: is it possible to be optimistic, even if you believe recipes for ruin may well be discovered by an ASI? Maybe even recipes dominant against plausible defensive systems? These questions matter because the future is made by optimists: they're the people with the vision and drive to act. In particular: good futures tend to be made by wise optimists, whereas bad futures are made by foolish optimists. For most of my life I've believed working to accelerate science and technology was the path of wise optimism. I'm now struggling with whether that belief was wise or, in fact, foolish. At the same time, I don't want fear of recipes for ruin to drive me into pessimism, an xrisk despondency trap22. There's no good future in that. Rather, I want to develop a wise optimism. This won't be an easy optimism – pessimism seems easier in response to a belief in recipes for ruin – but perhaps with enough active imagination it's possible to develop an optimistic point of view.

The basic intuition of the opening section was that science and technology keep increasing individual power, and this seems likely to eventually lead to individual humans (or individual machines) having enough power to destroy civilization. A problem with the heuristic is that over the past four centuries, just as science and technology has most increased individual power, we've also seen a gradual drop in interpersonal violence, and a general increase in safety, broadly construed23. Is the Armageddon heuristic wrong?

One common explanation for the ongoing increase in safety is that it's because of gradually increasing co-ordination and empathy and shared pro-social norms and institutions. The increase in effectiveness of these social technologies has actually outweighed dangers caused by the increase in individual power: humans have done a fantastic job increasing the supply of safety in the world. Even when very dangerous new technologies are introduced we find ways of ameliorating much or all of the danger. Perhaps that's how the heuristic model in the last section fails? Maybe there's some notion of the effective ability of an individual to do harm, and that hasn't changed so much; maybe it's gone up a little at times, gone down at others, without ever exploding:

If you extrapolate out, this suggests a sort of long-run Kumbaya love-is-the-answer24 Teilhardian approach to ASI safety. Maybe we will go through a kind of phase transition25, in which individual sentient beings come to love one another – or, at least, co-operate with one another – so much that they no longer harm one another in serious ways.

While I've used over-the-top language for clarity26, it's only a slight caricature of the past few centuries. If you'd explained to William the Conqueror and Genghis Khan the state of the world in 2024 they would have been very surprised at how peaceful we are in co-existence. Yes, there are still terrible wars and violence. But detailed analyses show that violence has diminished a great deal. It's one of the glories of our civilization that our present institutions supply safety as well as they do. Most people today are simply much, much safer than people of former times. This gradual change has some similarities to the much earlier evolutionary transition in which individual cells stopped competing with one another, and instead all joined figurative hands to become multicellular organisms. AI researcher David Bloomin posed something like this to me as his basic picture of how ASI safety will happen. And Ilya Sutskever seems to believe something related: for a long time his Twitter biography read "towards a plurality of humanity loving AGIs". I don't think that's quite the right framing, but it's worth pondering, and I'll consider a variation later in this essay. Of course, it's going to take enormous additional ingenuity to supply sufficient safety in an ASI future; absent that, we may instead live (and then die) in the Armageddon future.

In this viewpoint, good questions about the progress of science and technology are: what controls the supply of safety? When does our species do a good job of supplying safety well? When do we supply it poorly? Are there systematic ways in which it is undersupplied? Are there systematic fixes which can be applied to address any systematic undersupply? Can we supply safety without causing stagnation? Do we need to modify existing institutions or develop new institutions to achieve this? These are core questions. I won't address them immediately: we're in too passive, too reactive a frame. But we will return to them later, from within a more active frame.

You may object that "Oh, using 'safety' as an abstract quantitative noun in this way is a category error, likely to be misleading." In 1989, Alan Kay made a pointed joke about a similar (mis?)use of the term "interface"27: "Companies are finally understanding that interfaces matter, but aren't yet sure whether to order interface by the pound or by the yard." A similar joke may reasonably be made about my usage of "safety" – should we order it by the pound or by the yard? But I think it's helpful, albeit requires some care, to view safety in this aggregated way28: it makes the point that safety is something that can be systematically over- or under-supplied by society's institutions29, and challenges us to think about what the right systems are for supplying it. This is a crucial mindset shift.

There's obviously tension between the Kumbaya and Armageddon heuristics. In public discussion of ASI one way that tension is expressed is through disagreement between two prominent groups: (1) the "accels" (sometimes misleadingly called "techno-optimists"), who believe we should accelerate work on ASI, and who are xrisk denialists, since they deny or minimize xrisk from ASI; and (2) the "decels" (or "doomers"), who believe ASI xrisk is considerable, and who are pessimistic about any solution apart from stopping development of ASI.

It's tempting to think these two groups correspond to the two heuristic models I just introduced: the accels believe the Kumbaya heuristic model, while the decels believe the Armageddon heuristic model. However, the situation is more complex than that. In particular, many of the accels not only believe the Kumbaya heuristic, they treat it as near inevitable. They mostly don't worry about the supply of safety – I've seen some celebrate the decimation of the safety team at OpenAI in 2024, for instance. They treat safety as easy, someone else's problem, something to free ride on, while the accels ride ASI to their own assumed glory. By contrast some decels not only believe the Armageddon heuristic, they are pessimistic that much can be done about it except slowing down or reversing our progress in understanding.

I am making broad assertions here about rapidly-changing, heterogeneous and sometimes incohesive social movements. "Accels believe this, decels believe that". My description is a coarse approximation; in reality it's "many accels believe this, many decels believe that". In any case social reality often changes rapidly, so what is true today may be false in a year. Still, these simple caricatures contain much truth, at the time of writing. Furthermore, regardless of how well (or poorly) this describes social reality, the primary reason I am discussing this is to highlight these two sets of distinct assumptions. Teasing apart those underlying assumptions will help us formulate alternative points of view.

In part because the accel-vs-decel classification has become so prominent, many people assume the only way to be optimistic about the future is to deny xrisk. I've heard many conversations which contain variants on: "Oh, you worry about xrisk, you're one of those pessimists". This assumption is not only false, it's actively misleading. It's entirely possible to believe there is considerable xrisk and to be optimistic. In Part 3 of this essay we'll develop a point of view which accepts that there is considerable xrisk from science and technology, but then asks: how can we develop a truly optimistic response? This is a wise optimism which understands that you don't get good futures either by denying risks or by responding fearfully and pessimistically. Rather, you get good futures by deeply understanding and internalizing risks, then taking an active, optimistic stance to overcome them. It's a wise optimism that understands the tremendous achievement the Kumbaya heuristic graph represents, and takes seriously the enormous additional ingenuity that will be needed to supply sufficient safety in an ASI future. While that's a much harder and more demanding optimism, over the long run it's far more likely to result in good outcomes.

By contrast, while the accel xrisk denialists style themselves as optimists, if there really is xrisk then theirs is a foolish optimism, one likely to lead to catastrophe. It's like someone diagnosed with cancer deciding that they're going to "choose optimism", deny the cancer, and carry on as though nothing is wrong. These are the ideological descendants of those who brought us what they maintained were the gifts of asbestos and leaded gasoline.

The "wisely optimistic" view we'll develop is related to views held by some AI safety researchers at AGI-oriented companies. But as we'll see it goes further than is common in focusing not only on the safety of AI systems, but also on: (a) non-market aspects of safety which the market either won't supply or is likely to oppose; and (b) aspects of safety which aren't interior to the technologies being built, but are distributed through society and, indeed, the entire ambient environment, up to and including the laws of nature. We'll call this point of view coceleration, since it involves the acceleration of both safety (very broadly construed) and capabilities. As we'll see, it isn't an easy optimism, and requires tremendous active imagination and understanding. But it is plausibly the path of wise optimism.

Man is a very small thing, and the night is large, and full of wonder. – Lord Dunsany

So far we've focused on framing the problems facing humanity: how to ensure safety amid the progress of science and technology? We'll return to that in Part 3. I want now to switch to a future-focused imaginative mindset, one where we ask: what is the opportunity in the future? In, say, 20 or 50 or 100 or 200 years? Can we imagine truly wonderful futures, insanely great futures, futures worth fighting for?

Of course, imagining our future is a task for our entire civilization, not a short text! But we can at least briefly evoke a few points in the space of future possibilities. This is not a prediction of what will happen; it is rather an evocation of what we might potentially make happen, a few points on an endless canvas that can only be filled out by further imagination and an incredible amount of work. Nor is it a utopia: there's no narrative, much less a strong underlying theory of development. Nor will I justify the possibilities described, or even describe them in much detail. This is a limitation: all the possibilities below require immersion in extended discussion to really understand, let alone to comprehend the implications. However, the intent here is to forcefully remind myself (and readers) that we are, as David Deutsch has so memorably put it, at "the beginning of infinity". While the space of possibilities is far larger than we currently imagine, it's worth recalling just how large the space is that we currently can imagine!

Many prior sketches of the optimistic possibilities of the future have been written. I won't attempt a survey – this can't be both an evocation of possibility and an academic list of citations – but I want to note an ASI-adjacent surge of such sketches recently, from people including OpenAI CEO Sam Altman, Anthropic CEO Dario Amodei, the philosopher Nick Bostrom, and tech investor Marc Andreessen. While these are not disinterested observers, they are welcome in that understanding the threat posed by science and technology requires also a strong sense of the possibility of the future30.

So: what might we see in the future, given that we're still at the beginning of infinity? Imagine an incredible abundance31 of energy, of life, of intelligence, but most of all of meaning32, far beyond today. Imagine it as a profusion of new types of qualia, new types of experience, new types of aesthetic, and new types of consciousness, as different to (and as much richer than) a human mind as a human mind is different to a rock. Imagine it as a profusion of new types of identity and new types of personality. Imagine it as new ways of changing, merging, fracturing, refactoring identity and personality. Imagine it as wildly expanded notions of intelligence, of consciousness, of ability to be and to act and to love, in a million different flavours. Imagine it as a multitude of new ways to co-operate and co-ordinate and come to agreement, far beyond any individual.

Imagine it as ASI, BCI, uploads, augmentation, uplifting, new cognitive tools, immune computer interfaces33, metagenome computer interfaces. Imagine it as a profusion of new phases of matter, with properties currently inconceivable, the creation of phases of matter as a true design discipline, fully programmable and with a rich, composable design language. Imagine it as universal constructors, femtotechnology, utility fogs, a shift from uncovering the rules underlying how matter works to, again, a design point of view, in which we use those rules to make matter fully programmable. Imagine it as abstractions to describe entirely new types of matter, an interface direct to the laws of physics, of chemistry, of biology, and all the levels beyond. Imagine it as a billion new types of object and material and actions and affordances in the world34.

Imagine it as an end to hunger, an end to suffering, an end to apathy and ennui, an end to unnecessary illness and death, an end to the agony of aging and decay. Imagine it as sentience through all of space and into the far-distant future.

Imagine it as a loving plurality of posthumanities.

We humans currently use an infinitesimal fraction of the available energy, mass, space, time, and information resources. We use, for example, about 200 Petawatt Hours of energy each year; the sun outputs more than ten trillion times as much energy, about 3 million billion Petawatt Hours each year. The solar system is vast beyond our comprehension. And yet it is a tiny speck in our galaxy, itself a tiny speck in the universe as a whole.

The physical world is immense.

Imagine that immense physical world as our playground for the future, something we can gradually learn to harness as the basis for imaginative design.

In the biological world, we are (again) near the very beginning. We have, for example, only just attained a basic understanding of a tiny handful of well-studied proteins, such as hemoglobin and kinesin. And yet billions of proteins have been identified in nature, a smorgasbord of molecular machines, containing myriad secrets of both principle and practice. It's as though we've just stumbled upon an incredibly advanced alien microscopic industrial civilization, which we now get to study and learn from.

The biological world is immense.

Imagine that immense biological world as our playground for the future, something we can gradually learn to harness as the basis for imaginative design.

Barring a dramatic slowdown, I believe the 21st century will see our transition to a posthuman world35. I hope it will be a world where sentience blooms into myriad forms, coexisting harmoniously in a state of loving grace. And, as a partisan of humanity, an optimistic vision does not mean a world without humans, but rather one including human beings as well as many new types of sentience. What astonishing possibilities are there in such a world? How can we imagine and move toward such a world of abundance?

In this section I've eschewed the language of prediction, focusing instead on imagination and possibility. This contrasts with much AI safety discourse, which is often framed in terms of timelines, probabilities of doom, prediction markets, and so on. I've avoided this predictive viewpoint in part because it's the passive view of an outsider, not a protagonist; and in part because it de-centers imagination. ASI isn't something that happens to us; we control it. Timelines are something we are collectively deciding. To do that well we must see ourselves as active imaginative participants, not merely passive or reactive respondents. The predictive viewpoint should serve the imaginative viewpoint, not vice versa. That's why I've deliberately centered the imaginative viewpoint: imagination is more fundamental than prediction. If people such as Alan Turing and I. J. Good hadn't imagined AGI and ASI we wouldn't be discussing predictions related to them. Imagining the future well is both extraordinarily challenging and an extraordinary opportunity.

Reflecting on this section, I feel four sources of discontent. First, the speculative, impressionistic style lacks concrete detail. However, it is not enough to worry about safety and disaster; to understand those well we must also understand the optimistic possibilities (and vice versa). Still, while the evocation is (necessarily) not in-depth, I believe it's useful as a starting point for more in-depth consideration. Second, the section is too focused on technology and material conditions, with no rich evocation of new possibilities for experience or meaning or social organization. Those are at least as important as technology and the material world, but even harder to imagine. Third, I emphasize technology as a means of improving humanity's lot; some people see progress instead in a retreat from technology, making space for different values and forms of abundance36. I've emphasized the pro-technology approach in this section because the remainder of the essay takes a more critical stance. Fourth, in writing this section I am frustrated my own poverty of imagination. Humanity truly is at the beginning of infinity, but how dimly we perceive it! Imagining the future well is hard work, and our design discipline of the future is still nascent. We have devoted insufficient rigorous effort to imagining posthuman futures, resulting in a meager shared posthuman canon. We must change this for posthumanity to go well.

If our shared vision of the opportunity of the future is impoverished, then how can we develop a richer shared vision of the future? In this section I explore one approach to this problem. It's tangential to the main line of the essay, and the section may be skipped without affecting the main argument. Let me start elliptically. If you go to certain parties in San Francisco, you meet hundreds (and eventually thousands) of people obsessed by AGI. They talk about it constantly, entertain theories about it, start companies working toward it, and generally orient their lives around it. For some of those people it is one of the most important entities in their lives. And what makes this striking is that AGI doesn't exist. They are organizing their lives around an entity which is currently imaginary; it merely exists as a shared social reality.

Where did this imaginary entity originate? While I won't give a detailed history, it's the imaginative product of many minds. The 1950s were particularly fertile, the time when Alan Turing wrote his famous paper introducing his test for machine intelligence. It's the time when John McCarthy coined the term "Artificial Intelligence". It's when McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon ran the first workshop on AI. It's a little after Isaac Asimov began to write his stories about artificially intelligent robots. And, of course, long after stories like Mary Shelley's "Frankenstein", Samuel Butler's "Erewhon", and other similar progenitors.

Some of those people built simple prototype systems – Shannon, for example, built a machine to play a simplified version of chess. But their work was largely conceptual. They used their imagination and understanding to invent hypothetical future objects – things they believed could exist, but which didn't at the time. Indeed, not only did those hypothetical objects not exist, these people had only a dim idea of how they could be built. But they saw the broad possibility, and described it concretely enough to make it a viable goal. Subsequent decades saw many small steps toward that goal made by scientists. In parallel it also saw a tremendous amount of imaginative science fiction, fleshing out and exploring what AI could mean.

I use the term hyper-entity37 to mean an imagined hypothetical future object or class of objects. AI is just one of many hyper-entities; closely related examples include AGI, ASI, aligned ASI systems, mind uploads, and BCI. Outside AI, examples of hyper-entities38 include: world government, a city on Mars, utility fog, universal quantum computers, molecular assemblers, prediction markets, dynabooks, cryonic preservation, anyonic quasiparticles, space elevators, topological quantum computers, and carbon removal and sequestration technology39. Even on-the-nose jokes like the Torment Nexus are examples of hyper-entities. Many important objects in our world began as hyper-entities – things like heavier-than-air flying machines, lasers, computers, contraceptive pills, international law, and networked hypertext systems. All were sketched years, decades, or even centuries before we knew how to make them. But they ceased to be hyper-entities when they were actually created, sometimes rather differently than was expected by the people who originally imagined them. By contrast, things like dragons or unicorns, while imagined objects, are not examples of hyper-entities, since they aren't usually considered to be future objects. There are related hyper-entities, though: "genetically engineered dragon" is an example.

The most interesting hyper-entities often require both tremendous design imagination and tremendous depth of scientific understanding to conceive. But once they've been imagined, people can become invested in bringing them into existence. Crucially, they can become shared visions. That makes hyper-entities important co-ordination mechanisms. The reason AGI is a subject of current discussion is that the benefits of AGI-the-hyper-entity have come to seem so compelling that enormous networks of power and expertise have formed to bring it into the world. It's become a shared social reality. This is a common pattern with successful hyper-entities. While still imaginary, they may exert far more force than many real objects do40. As a result, the futures we can imagine and achieve are strongly influenced by the available supply of hyper-entities. This makes the supply of hyper-entities extremely important: they determine what we can think about together; they are one of the most effective ways to intervene in a system; a healthy supply of hyper-entities helps pull us into good futures. If all we imagine is bad futures that's likely what we'll get41.

To see how much this matters, it's worth pondering what would happen if as much effort was applied to other hyper-entities as is currently being applied to AGI. I don't just mean money; I mean work by a multitude of brilliant, driven, imaginative people, each of whom believe ASI is going to happen, and is working hard to find some special edge. And this has mobilized tens of billions of dollars and enormous ingenuity to the task. What if the same level of social belief – and thence of ingenuity – was brought to bear on, say, immune computer interfaces? Or cryonics? I believe progress on those hyper-entities would dramatically speed up; they might well become inevitable. In this sense, shared belief is the most valuable accelerant of AGI today. And we can flip this around and ask: what if AGI became as socially unpopular as (say) the Vietnam War became in its later stages? What if it became more unpopular than the Vietnam War? It seems likely that, at the least, AGI would be considerably delayed.

The term hyper-entity is related to the notion of a hyperstition, introduced by the accelerationist philosopher Nick Land in the 1990s42. As summarized by Wikipedia, hyperstitions are ideas that, by their very existence as ideas, bring about their own reality, often through capitalism43, though sometimes through other means. In a 2009 interview, Land describes a hyperstition as:

a positive feedback circuit including culture as a component. It can be defined as the experimental (techno-)science of self-fulfilling prophecies. Superstitions are merely false beliefs, but hyperstitions – by their very existence as ideas –- function causally to bring about their own reality. Capitalist economics is extremely sensitive to hyperstition, where confidence acts as an effective tonic, and inversely. The (fictional) idea of Cyberspace contributed to the influx of investment that rapidly converted it into a technosocial reality. Abrahamic Monotheism is also highly potent as a hyperstitional engine. By treating Jerusalem as a holy city with a special world-historic destiny, for example, it has ensured the cultural and political investment that makes this assertion into a truth… The hyperstitional object is no mere figment of ‘social constuction [ sic ]’, but it is in a very real way ‘conjured’ into being by the approach taken to it.

Of course, an object may be both a hyperstition and a hyper-entity. However, Land's notion of hyperstition is focused on how it is socially and culturally self-realized: as the -stition suffix indicates, it's primarily a belief. For us in our concern with hyper-entities, while belief and self-realization are interesting possibilities they are not central. A hyper-entity may be strongly socially self-realizing, or not at all. Rather, we are focusing on the design properties – what the hyper-entity can do, and how it can do it, not on the social means by which it is realized. This perhaps seems more quotidian, but I believe the history of technology shows that often it is the design properties that are most interesting – the new affordances a hyper-entity enables – rather than the means of realization. As an example: the concept of a universal assembler is an extremely interesting hyper-entity, but (currently) it is arguable whether it could reasonably be called a hyperstition.

Where do new hyper-entities originate? There are a few common sources: basic science; design; futurism or foresight studies; science fiction; venture capital and the startup ecosystem44. My impression – I haven't done a detailed study – is that all make some contribution. The most common pipeline seems to be: basic science → (not always, but sometimes crucially) science fiction → academic science or hobbyist communities → investment-backed startups. Design, futurism and foresight studies play relatively smaller roles, perhaps because they're more oriented toward client work, and as a result rarely engage as deeply with basic science.

Many important hyper-entities originate in what I have called vision papers45. This includes universal computers, AGI, hypertext systems, quantum computers, and many more. Vision papers are a curious beast. They typically violate the normal standards for progress in the fields from which they come; they often contain no data; no hard results of the type standard in their fields; rather, they merely imagine a possibility and explore it. Alan Turing's paper on AI is a classic of the genre. It's often difficult to write followups to such papers; such followups tend to feel like speculation piled upon speculation. As a result of these properties, vision papers are surprisingly uncommon. The response of scientists to such papers is sometimes "why don't you do some real work, not this abstract philosophizing?" It's tempting to vilify academic science for this response, but much of the power of academic fields comes from their ability to impose (field-specific) standards for what it means to make incremental progress. Those field-specific standards of progress are extraordinarily precious, some of humanity's most significant possessions, and vision papers often violate those standards. It seems to me that creating high-prestige venues for such vision papers would potentially substantially increase their supply, while preserving the standards of the underlying fields. This might mean a journal of vision papers; it might mean something like a Vision Prize. It is also interesting to consider creating an anti-prize, perhaps the Thomas Midgeley Prize, to be "awarded" to the individuals whose invention has most damaged civilization.

Science fiction is sometimes mentioned as a place where hyper-entities originate. The most commonly cited example is likely Arthur C. Clarke on geosynchronous satellites, though in fact Clarke was popularizing an idea proposed earlier by Herman Potočnik. A still clearer example is Vernor Vinge on the Singularity; while Vinge did not originate the notion of the Singularity, he did write the deepest early essays and stories about the idea. Much of the most valuable exploration and development of many hyper-entities – including ASI, BCI, and many more – has been done through science fiction. The present essay has benefited: it would be much impoverished in a world without Butler's Erewhon, Asimov's Robot stories, the works of Vernor Vinge, and many more.

However, science fiction has two major problems as a source of hyper-entities. One problem is that it tends to focus on what is narratively plausible and entertaining; neither of these results in good hyper-entity design. It is, for example, very easy and often narratively useful to write about a "faster-than light drive" or a "perpetual motion machine"; unfortunately, that ease-of-writing does not necessarily correspond to ease-or-even-possibility-of-existence. Good hyper-entity design requires both deep insight into what is possible, and also checking against extant scientific principles. This kind of checking is done in first-rate vision papers; but it doesn't make for good narrative, nor is it usually regarded as entertaining, and so it's not typically demanded by the conventions of science fiction.

A second problem with science fiction as a source of hyper-entities is that science fiction authors are often not deeply grounded in the relevant science. They just won't have the depth of background to invent something like anyonic quasiparticles or universal computers. Such discoveries emerged out of truly extraordinary insight into how the world works. And insofar as nature is more imaginative than we, we should expect far greater opportunities to lie latent within such an understanding of nature than anywhere else. For that reason, I expect basic science will remain the deepest source of hyper-entities.

What would a serious practice of hyper-entity design look like? It would be deeply grounded in science. It would ultimately look for falsifiability and check against known principles, not narrative plausibility or entertainment. It would look for powerful new actions, powerful new object-subject relationships. It would be especially oriented toward discovering fundamental new primitive objects, affordances, and actions. In this it would be similar to interface and programming language design, but broadly across all the sciences, not constrained to the digital realm. This kind of imaginative design of new fundamental primitives is shockingly hard; in my admittedly limited experience46, it makes theoretical physics seem easy. Finally, such a discipline would be connected to a pipeline of later development.

I began this section with the question: how could we develop a much richer shared vision of the future? That question was motivated by the relative poverty of vision in the last section. As far as I can see, we have almost no serious discipline of imagining the future. What were people like James Madison, Alan Turing, and Jane Jacobs doing when they imagined the future? How to imagine good as opposed to bad futures? Some will object that there are already serious or at least nascent disciplines associated to predicting, forecasting, and influencing the future. That's fair enough, but it seems to me that when it comes to imagining the future we're much less serious. And, as emphasized in the last section, all those other activities are downstream of that act of imagination, since that's how we both reason about and co-ordinate to make the future47.

While I don't know how to imagine good futures, I do know that hyper-entities are a piece of the future we have experience in supplying. They're sufficiently narrow that they can be reasoned about in detail, and can be the focus of large-scale co-ordination efforts. I believe we can aspire to develop a good design discipline of hyper-entities, one which helps us imagine better futures (including safer futures). I think we're a long way from such a design discipline, but it's a worthy aspiration, and would help in expanding our conception of possible positive posthuman futures. And ideas like a vision journal or Vision Prize or Midgeley Prize would potentially help48.

I sometimes speak with naive builders – often engineers or startup founders – who think of hyper-entities as somehow "obvious". That's true of some hyper-entities, but it's not true of many of the most important; a concept like ASI seems obvious today, due to familiarity, but was not obvious historically. A related misconception is that all the good hyper-entities were conceived by builders. That's not even close to true. Sometimes, the conception of a new type of object and the implementation come from the same person or group – I believe this was true of the scanning tunnelling microscope, for instance. But very often a Tim Berners-Lee needs a Ted Nelson, or multiple Ted Nelsons, to come before them, preparing the conceptual path49. The skills required to imagine hyper-entities in the first place are often very different from those required to build them. David Deutsch was an excellent person to imagine quantum computers; he is not at all the right person to build them, as he is happy to acknowledge. Ditto Bose, Einstein, and Bose-Einstein Condensation. Or Alan Turing and Artificial Intelligence.

Relatedly, I've often encountered self-styled AI builders who pooh-pooh conceptual work, especially the conceptual work done by much of the AI safety community. "A bunch of bloggers". "Wordcels". "It's all just talk and academic handwringing, none of it results in real systems". "The future belongs to those who build!" "Nothing has come out of all that philosophy or Less Wrong stuff, it's all from real hackers / companies". "A bias to action!" And when you talk more to those builders, they mention ASI, and timelines, and FOOM, and alignment, and compute overhang, and slow takeoffs, and multipolar worlds, and the vulnerable world, and what it means to have a good Singularity. They live inside a conceptual universe that has been defined in considerable part by many of the people they deride. Indeed, they often even forget that AGI is a concept out of the imagination of Alan Turing and a few others – including people like Nick Bostrom – conceived in work of the kind they dismiss. But it's so compelling that they've become caught in that construct. It's a kind of builders' myopia. One is reminded of Keynes in a very different context: "Practical men, who believe themselves to be quite exempt from any intellectual influences, are usually the slaves of some defunct economist. Madmen in authority, who hear voices in the air, are distilling their frenzy from some academic scribbler of a few years back…. it is ideas, not vested interests, which are dangerous for good or evil." Of course, AI-related hyper-entity design isn't exactly coincident with what the AI safety community has been doing, but there is significant overlap. And deep conceptual work aimed at imagining good futures is of the utmost importance to achieving good futures.

In Part 2, I attempted to evoke a few mostly-positive elements of a posthuman future. Of course, as discussed in Part 1, important new technologies often create major disruptions, sometimes even catastrophes: consider the discovery of bronze; of iron; of agriculture; of democracy; of gunpowder; of the printing press; of social media; of added sugar in food; of asbestos; of oil; of aircraft; of nuclear weapons. Each caused major problems (and some had major positive consequences too). Whether the elements of a posthuman future which I have evoked are healthy depends upon our ability to adapt to the problems they create.

One approach to addressing these issues is through the concept of Differential Technological Development (DTD). Informally, this means ensuring defensive technologies progress more rapidly relative to offensive. A fuller 2019 definition by Bostrom50 reads as follows: "Retard the development of dangerous and harmful technologies, especially ones that raise the level of existential risk; and accelerate the development of beneficial technologies, especially those that reduce the existential risks posed by nature or by other technologies." It can be difficult to determine which technologies are harmful and which are beneficial, but this definition is at the least a useful conceptual step.

The Alignment Problem was introduced51 in the context of artificial intelligence. But a similar problem applies more generally to science and technology, and I recently52 gave a more general formulation: "the problem of aligning a civilization so it ensures differential technological development, while preserving liberal values". For good futures, civilization must continually solve the Alignment Problem, over and over and over again; it must always be supplying safety sufficient to the power of the technologies that it develops. It's not a problem to be solved just once, but rather a systematic, ongoing problem that requires continual solution. Indeed, even if a society of ASIs arose that wiped out all human beings, that society would itself still face the Alignment Problem. Violence and concentration of power would still be likely to tear such a world apart. The obvious point to wonder about in the Terminator movies is: even if the machines win, what prevents them from turning on one another? Without strong moderating influences, they will self-extinguish. The extent to which any society, human, non-human, or mixed, is able to survive and flourish is determined by the quality of its solution to the Alignment Problem.

There are many challenges in making the terms introduced above precise: how can we determine which technologies are dangerous or offensive; which technologies are defensive or beneficial; what does it mean to supply safety; what count as liberal values53; what does it mean to absolutely prioritize? And so on. I have addressed some of these questions in small part in earlier work54, but the bulk of the issue remains. With that said: for early-stage conceptual work, it's often useful to leave terms relatively loosely bound; as you apply them in practice you can iteratively improve the quality of the definitions55. This requires getting into the details of many concrete examples. For now, for conceptual sketching, we will leave the terms loosely bound.

Fundamental questions related to the Alignment Problem are: what systems does our civilization use to supply safety? In what ways is safety systematically undersupplied? How well are we solving the Alignment Problem? A certain kind of free market believer tends to believe safety will be well supplied by the market as new technologies are introduced. This is well illustrated by partial failures of the point. For instance: the world's first commercial jet aircraft was the de Havilland Comet. Three Comet aircraft crashed in the first year; unsurprisingly, sales never recovered, and the Comet was discontinued. However, other companies developing jet aircraft paid close attention, and learned to make much safer aircraft. While the crashes of the Comet were a tragedy, the rapid adaptation of the market was healthy. In cases like these there is a strong and rapid safety loop operating: when risks are borne immediately and very legibly by the consumer, the market is well aligned with safety, and supplies it well. Put another way: capitalism amplifies safety when dangers immediately and visibly impact the consumer, as in the case of the Comet. In this view it is unsurprising modern airlines are extraordinary paragons of safety. Indeed, in many markets anticipation of this feedback effect helps ensure that products are very safe even before the first version gets to market; the analogue of the Comet crashes never even happens in such cases. This has happened in many markets (often with a regulatory assist), from food to toys and even to the early AI models: techniques such as RLHF, Constitutional AI, and the work coming out of the AI Ethics and Fairness community are all examples of the safety loop operating, in part due to market incentives.

By contrast, when no consumer is bearing an obvious immediate cost, the market often supplies safety much less well. Markets often amplify technology which seems to benefit consumers, but has large hard-to-see downsides, sometimes borne collectively, sometimes borne in hard-or-slow-to-see ways by consumers. Examples include CO2 emissions, asbestos, added sugar in food, leaded oil, the pollution caused by internal combustion engines, CFCs, privacy-violating technologies, and many, many more. This tends to happen when the risks involve: externalities; long timelines; are illegible or hard to measure; are borne diffusely, perhaps damaging the commons; when it is hard to establish property rights in the harm; damage to norms or values. Those of an economic mindset sometimes simplify this down to "externalities", but this is an oversimplification. However, insofar as collective safety can be (imperfectly) modeled as a public good, we'd expect it to be undersupplied by the market.

(I cannot resist a digression on the fascinating case of CFCs, from which much can be learned. Early refrigerators used ammonia as a refrigerant; this sometimes leaked, killing people. As a result, manufacturers switched to CFCs, an instance of the market working well to reduce immediate risks. Unfortunately, the switch caused a much less legible risk to the commons – damage to the ozone hole – which took decades to understand. This was then resolved by collective action in the form of the Vienna Convention and then the Montreal Protocol, which mandated a worldwide switch from CFCs to HFCs. However, while this collective action is mostly the result of government and NGO action, it had a fascinating and important market component: Du Pont was the world's largest maker of CFCs, and might well have opposed the switch, but after some dithering announced that they supported it. This was in their self-interest, albeit in part because of considerable foresight for which they deserve credit: they'd begun a search for CFC substitutes more than a decade earlier, and were poised to also become a major player in the new market for HFCs. Other manufacturers fell into line. It's as though Saudi Aramco and Exxon-Mobil were set to become the world's largest renewable energy companies, due to their foresight and commitment to renewable energy. It's interesting to speculate on what would have happened had the switch away from CFCs been opposed by Du Pont and others. The Vienna Convention and Montreal Protocol were exceptionally successful – the first treaties ratified by every UN State. Would that have been the case if Du Pont had strongly opposed HFCs?)

In general, we will define the non-market parts of safety as those which are not in short-term commercial interests to supply. A simple example is that seatbelt laws were, for a long time, opposed by many automobile companies. Many of the biggest challenges our civilization has faced (or faces) are examples of cases where the non-market parts of safety dominate. When I was growing up, teachers told me I'd likely die due to: nuclear war; climate change; acid rain; the ozone hole; or one of several other ailments. What unites these ailments is that they didn't have obvious market solutions. Sometimes it was because they were largely outside the market (e.g., nuclear weapons). And sometimes it was because in the short term, the market was actually contributing to such problems, e.g., people burning oil as they drive cars has contributed to climate change. This makes the situation seem quite hopeless. Fortunately, in each case we've found ways to build bespoke non-market mechanisms to close the safety loop. And, slow as it might seem, we've made considerable (though often not enough) progress on nuclear war, pollution, climate, acid rain, the ozone hole, and so on.

Achievements like the nuclear non-proliferation treaty, the Clean Air Act and its analogues in non-US countries, and the Vienna Convention and Montreal Protocol are among humanity's greatest achievements. There's a striking common basis for the safety loop in each case: the worse the anticipated threat – a constructed collective epistemic state56 – the better humanity is able to respond. Many major (and minor) problems have been solved this way; it is arguable that this kind of safety is among our greatest social technologies. It's easy to take for granted, but notable that other animals can't operate such a safety loop. But while it's good we have made progress, our response to problems like nuclear war or climate change has not yet been sufficient. And it becomes worse with things like ASI where there are enormous barriers to agreeing on the threat. Indeed, we're beginning to see some (though not all) market actors deliberately sow doubt about the threat, not due to reasonable doubts, but due to their self-interest. In this it mirrors climate and many of the other examples above. As we'll see in the next section, while we should expect market forces do a good job of supplying some kinds of safety related to ASI, for many others it means organizations acting against their own short-term interest. To continue solving the Alignment Problem we desperately need institutions which do a better job supplying safety.

I've been describing the Alignment Problem as a collective societal issue. It may also be given an individual framing. A society tends to get what it rewards with success; it must therefore be careful what it rewards with success57. This suggests the Alignment Problem for Individuals: achieving a society in which individuals pursuing their own "success" tend also to be contributing to the good of that society58. Of course, "individual success" and "good for society" will never be more than partially aligned; we won't ever fully solve the Alignment Problem for Individuals. But much of the improvement in a civilization comes from better aligning the two, and continuing to align the two. Ideally, even sociopaths acting entirely in their own self-interest will serve the common good; wise, intelligent pro-social people will be especially successful. This was one of Adam Smith's most extraordinary insights – that people acting in their own self-interest in a market economy may also serve the common good. Indeed, this is the primary justification for today's market economy – a point sometimes forgotten or not acknowledged by free market maximalists59. AGI places significant pressure on this alignment: starting or joining an AGI startup is certainly in individuals' self-interest, but may be against our civilization's interest. I believe that if we can solve the Alignment Problem for Individuals, then all else will follow. This is yet another reason to care about problems like climate and too much wealth inequality: they are failures to solve the Alignment Problem for Individuals, and institutional solutions which address those are likely to help address the Alignment Problem for Individuals more broadly60.

In the last section I discussed alignment as the general problem of humanity (and posthumanity) safely coevolving with science and technology. Of course, much attention is currently on the Alignment Problem in the special case when the new technology is AGI and ASI. Most often this means a focus on technical alignment – making sure the systems we build are aligned with both: (1) user intent; and (2) some broader collective notion of good (or at least acceptable) action. Point (1) is the analogue of making a car with a steering wheel that works, so the car goes where the user intends. Point (2) is the analogue of a car that isn't too loud, doesn't spew too much pollution, doesn't go too fast, and so on – a generally pro-social car design. In the ideal, it would arguably mean a car that couldn't crash or be used as a weapon or have any negative effects whatsoever.

This vision of aligned ASI is powerful: it will result in ASI that does what we want, as individuals and as a civilization. But it comes with many challenges. How can we align it with user intent, when user intent may be malicious or (perhaps) unintentionally damaging? How do we reconcile tensions between user intent and collective good? No matter how well it is aligned (or not), an ASI will become in some measure a version of George Orwell's "Ministry for Truth", providing a (hopefully limited!) monopoly on truth and values, insofar as users rely on them, or they dictate behaviour. Elon Musk has stated, apparently without irony, that "What we need is TruthGPT". One ought to beware of any approach which centralizes values and truth in particular technical artifacts. Those artifacts are loci of enormous power61, and inevitably become battlegrounds62. George Orwell sharply and correctly warned of the dangers of centralized arbiters of "truth". One of the great breakthroughs of civilization has been tolerance and the open, decentralized pursuit of truth and exploration of values. ASI is in strong tension with that, especially notions of aligned ASI, which by definition aim at the imposition of certain values.

These are extremely challenging problems. But a still harder problem is that this notion of technical alignment is inherently extremely unstable. If we can build technically aligned ASI systems, then very dangerous non-aligned systems will almost certainly also be built. As a simple illustrative example, Kevin Esvelt's group63 recently conducted a hackathon in which participants "were instructed to discover how to obtain and release the reconstructed 1918 pandemic influenza virus by entering clearly malicious prompts into parallel instances of the 'Base' Llama-2-70B model and a 'Spicy' version tuned to remove censorship". They found that the "Base model typically rejected malicious prompts, whereas the Spicy model provided some participants with nearly all key information needed to obtain the virus." Of course, there are many caveats to this result. The information could have been obtained by participants in other ways, albeit perhaps more slowly and with more difficulty. And one can imagine technical fixes – perhaps developing models which are not only safe as-released, but also "stably safe" up to some level of finetuning. With that said, one must agree with the Esvelt group's conclusion: "Our results suggest that releasing the weights of future, more capable foundation models, no matter how robustly safeguarded, will trigger the proliferation of capabilities sufficient to acquire pandemic agents and other biological weapons."

It's tempting to think the solution is to make open source models64 illegal, and the closed models tightly regulated or even nationalized, with limited ability to finetune. This may, indeed, buy some time. But it's unlikely to buy much, and it comes with tradeoffs that ought to make us cautious65. When GPT-3 was released in 2020, it was regarded as a huge model, and very expensive. Just 4 years later, many open source models are far better, and some cost relatively little to train. The issue isn't so much whether a model is open or not, it's that the models so far just don't seem to be that hard to build, and a given level of capabilities comes down rapidly in price and required know-how. While the first AGI and ASI systems may well be very expensive, it seems likely we'll rapidly figure out how to make them much less expensive. In consequence, it's not the openness or closedness of the training code and weights which matters, so much as how openly the underlying know-how is available. At the moment, with California's non-compete laws, and San Francisco's hundreds of AI parties and group house scene66, we're in an open know-how scenario. And that open know-how is upstream of the issues caused by open source, and likely harder to stop. Once achieved, it seems almost certain that AGI and ASI will rapidly get easier to make. So: the first such systems may well be technically aligned, but it seems inevitable later systems will not be aligned, or will be aligned around very different values.

All this makes it extremely unstable to aim at technically aligned systems. This kind of safety tends to accelerate development, since it makes the systems more attractive to consumers (and, often, governments and the media). In this it is an example of the safety loop described earlier – companies and capital incentivized to make systems "safe" according to prevailing consumer and societal sentiment. But it has much less legible side effects that may be dangerous, and which are less well addressed by the safety loop. To repeat the words of von Neumann: "the very techniques that create the dangers and the instabilities are in themselves useful, or closely related to the useful. In fact, the more useful they could be, the more unstabilizing their effects can also be… Technological power, technological efficiency as such, is an ambivalent achievement. Its danger is intrinsic… useful and harmful techniques lie everywhere so close together that it is never possible to separate the lions from the lambs." In our context, this means: capitalism incentivizes the creation of AGI and ASI systems which are helpful to consumers; but those systems will contain an overhang67 of dangerous capabilities, perhaps including dominant recipes for ruin. It will be similar to the way relativity and particle physics looked entirely beneficial at first; but latent within that understanding were the ideas behind nuclear weapons, a much more mixed blessing68.