Adapted from brief remarks to describe some of my past and current creative interests. Prepared for a workshop held November 2023 in San Francisco.

How to accelerate the progress of science and technology? This question has been at the heart[1] of nearly all my creative work. Beyond working on specific scientific problems, I've also done a lot of work aimed at improving the broader tools and systems humans use for discovery. It's challenging to convey my career briefly, but a perhaps evocative slice is this list of my books and book-like projects (with apologies for immodesty in the descriptions):

I've done many projects apart from writing books! But I do love writing books, especially books that help catalyze and distill nascent research fields and creative communities. I find such proto-fields messy but irresistible. It's usually just you and a tiny handful of co-conspirators, wrestling with fundamental questions, amidst fog and confusion. Often there's no clear way to address those fundamentals. You see this in metascience today, for instance: right now we have only weak and incomplete ideas about how to empirically answer such basic questions as: when is one organizational approach to science better than another?

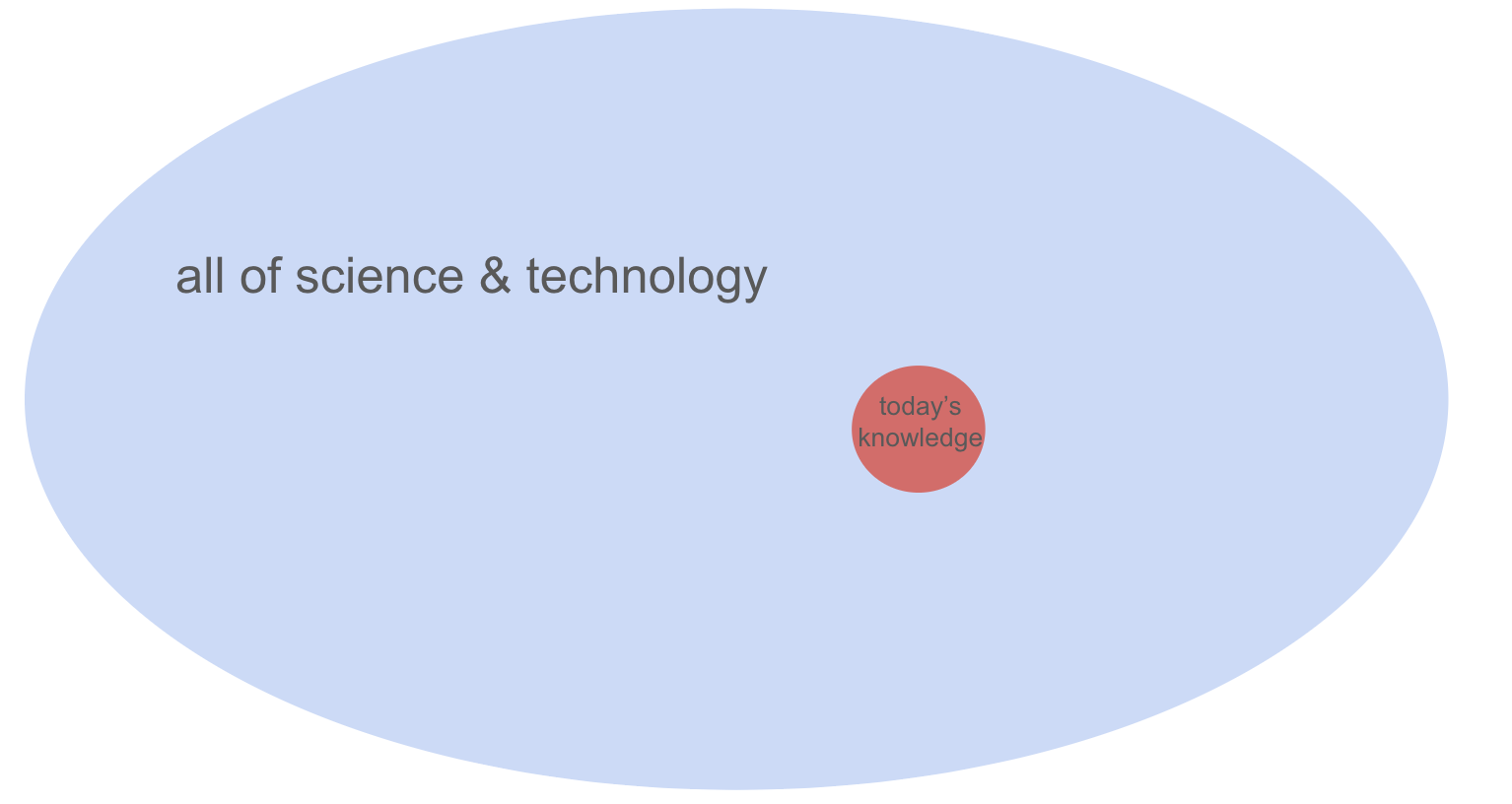

As you can see, my work has nearly all been about accelerating science and technology. But over the last year I have found myself very conflicted. I've begun to question whether accelerating science and technology is a good idea at all. On the one hand, I believe we're still in the very early days of science and technology, and many wonderful things remain to be discovered:

On the other hand, I also wonder what dangerous things remain to be discovered. Suppose you could consult an oracle advisor who understood everything about science and technology. Not just about current-day science and technology, but about all possible future science and technology. Such an oracle could certainly help you make many wonderful things. But I also wonder what dangerous things it could help you make. Might it be that scientific understanding will eventually and inevitably enable technologies which are both catastrophically dangerous and inherently ungovernable? If so, how can we prevent that from happening?[2]

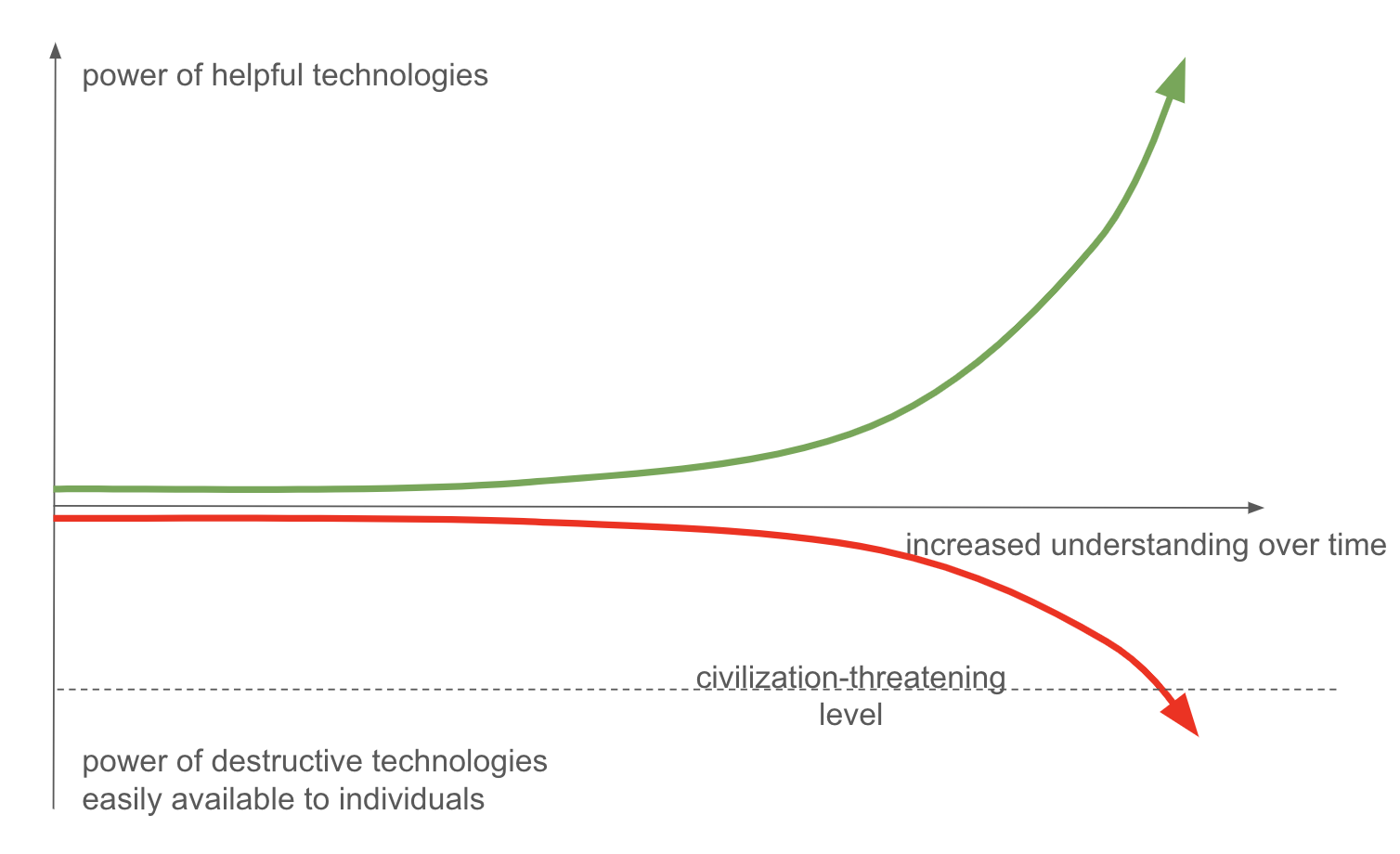

Put another way: as our understanding improves over time, more and more helpful technologies become easily available to individuals. Unfortunately, the same progress also makes more and more destructive technologies easily available to individuals:

Our deepest understanding makes the universe more malleable in very general ways; as a result it tends to be intrinsically dual use, enabling both wonderful and terrible things. For instance: the insights of relativity and quantum mechanics helped lead to the transistor and many medical breakthroughs; they also led to the hydrogen bomb. It's not clear you can get the wonderful things without the terrible things. It's natural to wonder where this eventually leads. Will it become possible to make technologies both much more destructive than the hydrogen bomb, and also far easier to construct? This perhaps seems ridiculous, but the history of science and technology is filled with discoveries that from a prior point of view appeared impossible – indeed, sometimes even inconceivable – but which have since become routine. Graphite squiggles on tree pulp sometimes help us recombine material elements to do the marvelous! Think of how impossible things like the steam engine, radio, the Wright flyer, or the iPhone would have appeared to the early Egyptians.

Stating the concern of the last paragraph more abstractly: will scientific knowledge eventually advance so much that we discover technologies which are: (a) trivial to make, perhaps even easily made by individuals who merely have the right knowledge; and (b) which threaten civilization?

These are what I call recipes for ruin, meaning simple, easily implementable but catastrophic technologies[3].

There's been much recent discussion of specific possibilities for recipes for ruin – engineered pandemics, artificial superintelligence (ASI), and so on. Nick Bostrom has done an insightful analysis of the broader general possibility in his discussion of the "Vulnerable World Hypothesis"[4]. Recipes for ruin have also been explored for more than a century by science fiction writers, with sometimes fanciful examples including easily-made black holes, easily-made anti-matter bombs, destabilizing states of matter such as Ice-9 in Kurt Vonnegut's book "Cat's Cradle", and many more. Science fiction aside, there are many suggestive partial examples of recipes for ruin from the Earth's past, including: the chance creation of photosynthesis 2.4 billion years ago, which saw the Earth's atmosphere go from oxygen-free to oxygenated, suffocating most organisms; the rise of self-replicating life, which utterly transformed the Earth; and even exothermic reactions such as fire. If the Earth's atmosphere were a little more oxygen-rich then fire would be significantly worse, and intense firestorms much more common; it is quite possible we wouldn't be here to contemplate the possibility.

Indeed, it's not obvious there aren't still unknown hard-to-ignite but catastrophic reactive pathways. Famously, Edward Teller and his collaborators Emil Konopinski and Cloyd Marvin considered this possibility in 1946, when they wrote a remarkable report[5] investigating whether hydrogen bombs might set the world's entire atmosphere alight in a self-sustaining reaction. They concluded, correctly, that they wouldn't. But, as has been pointed out by Toby Ord[6], a similar calculation missed a crucial reactive pathway in the 1954 Castle Bravo nuclear test. One missing neutron in that later analysis resulted in a 15 megatonne yield rather than 5 megatonnes, and killed many innocent people. It's a good thing the error was in the Castle Bravo analysis, and not the atmospheric ignition analysis.

It's tempting to dismiss discussion of such recipes for ruin as fearful catastrophizing. Progress comes out of optimistic plans! But what is a wise foundation from which to make such optimistic plans? Even if you put aside immediate concerns like pandemics or ASI, there's a fundamental set of questions here about the long-run development and governance of science and technology. Most basically, and to reiterate my earlier question: will a sufficiently deep understanding of nature make it almost trivial for an individual to make catastrophic, ungovernable technologies?

This is a question about the very long run of science and technology. For most of my life I've ignored this question, focusing on more immediate concerns. But I've come to believe it is a central question for our civilization. If you believe the answer is "no, such technologies will never be discovered", then you may think the existing systems of governance we have are sufficient. But if you believe, as I do, that the answer is "yes, such technologies may be discovered", then the question becomes: what institutional ideas or governance mechanisms can we use to stay out of this dangerous realm? Put another way: is a wise accelerationism possible? If so, how? And if not, what to do about it? Or to put it still another way, as done by one of the greatest scientist-technologists ever to live, John von Neumann: "can we survive technology?"[7]

All of this challenges a principle I've always held dear: that science is on net good, benefiting humanity, and should be pursued aggressively, with constraints only applied locally to obviously negative applications. We may, for example, ban things like leaded gasoline, but we certainly don't ban the kind of basic research that forms the background to such inventions. But I now doubt the broader principle, and wonder what to replace it with. Crucially, any replacement must still give us most of the benefits of science and technology – indeed, perhaps even accelerate many aspects of it – but without irreversibly exposing us to catastrophic danger[8]. I'm by temperament an extremely optimistic person, but I'm really struggling with this set of questions.

The conventional response is that we deal with this using governance feedback loops. As an example: I grew up thinking humanity would likely suffer tremendously from: acid rain; the ozone hole; nuclear war; peak oil; climate change; and so on. But in each case, humanity responded with tremendous ingenuity – things like the Vienna and Montreal protocols helped us deal with the ozone hole; many ideas (Swanson's Law, carbon taxes, and so on) are helping us deal with climate change; and so on. The worse the threat, the stronger the response; the result is a co-evolution[9]: while we develop destructive technologies, we also find ways of governing them and blunting their negative effects. But if a sufficiently powerful technology is sufficiently easy for individuals to make, I don't see how the usual governance feedback loop can be sufficient. It must be replaced by something stronger. What sorts of strengthening would work? That's what I'm thinking about: what governance principles would ensure a healthy co-evolution of science and technology with society? What I like about this is that while I'm finding it an extremely challenging question, it's also a fundamentally optimistic and positive place to stand. Thanks for your attention!

[1] Of course, it's not the only drive underlying my past work. But it's very important to me, and I've focused on it here because it's in strong tension with my current work, of which more anon.

[2] I will spend the rest of this talk on these questions. But I want to mention three other broad active and enduring creative interests. Believe it or not, these are all tied together. But explaining that's a subject for another day! They are, very briefly: (1) the synthesis of the secular and the religious; how religion will change as we transition to posthumanity; religion-as-an-atheist; (2) art in all forms; the relationship between art and mathematics; the rogue scientist-artist-designer; and (3) programming languages for matter, especially (but not only) the design of new phases of matter. Let me also say: I'd like to collaborate more; collaboration with people I come to love and admire is tremendously important to me!

[3] I've discussed recipes for ruin more in my Notes on Existential Risk from Artificial Superintelligence, and expect to soon publish a more extended treatment.

[4] Nick Bostrom, "The Vulnerable World Hypothesis", Global Policy (2019).

[5] Emil J. Konopinski, Cloyd Marvin, and Edward Teller, "Ignition of the Atmosphere with Nuclear Bombs" (1946).

[6] Toby Ord, "The Precipice" (2020).

[7] John von Neumann, "Can We Survive Technology?" (1955).

[8] I've been extremely disappointed by the attitude of certain gung ho (but sometimes rather thoughtless) technologists, who take the attitude that only almost unfettered acceleration is good. They often seem to confuse their power to act – which isolates them from immediate consequences – with being correct. To paraphrase Feynman, for a successful technology, reality takes precedence over what even the powerful desire, for Nature cannot be fooled.

[9] This is, of course, an analogy, not co-evolution in quite the sense meant by biologists, although there are some interesting similarities.